Install Python34 Library

How do I install the Python Imaging Library? First, run the following command, which installs the build dependencies you might need:

sudo apt-get build-dep python-imaging

Then update and upgrade your packages:

sudo apt-get update && sudo apt-get -y upgrade

Next, install pip:

sudo apt-get install python-pip

Finally, install Pillow, the maintained fork of PIL:

pip install pillow

🤗 Transformers is tested on Python 3.6+, and PyTorch 1.1.0+ or TensorFlow 2.0+.

You should install 🤗 Transformers in a virtual environment. If you’re unfamiliar with Python virtual environments, check out the user guide. Create a virtual environment with the version of Python you’re going to use and activate it.
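For readers who prefer to script this step, here is a minimal sketch using the standard-library venv module, the programmatic counterpart of python -m venv. The helper name create_env and the .env directory name are illustrative choices, not something this guide prescribes. The demo builds its environment in a throwaway directory and skips pip so that it stays fast and offline.

```python
import tempfile
import venv
from pathlib import Path


def create_env(env_dir, with_pip=True):
    """Create a virtual environment at env_dir and return its path."""
    venv.create(env_dir, with_pip=with_pip)
    return Path(env_dir)


# Demo in a temporary directory; with_pip=False avoids bootstrapping pip.
with tempfile.TemporaryDirectory() as tmp:
    env = create_env(Path(tmp) / ".env", with_pip=False)
    # Every environment created by venv contains a pyvenv.cfg file.
    print((env / "pyvenv.cfg").exists())  # True
```

For a real project you would call create_env(".env") with the default with_pip=True, then activate the environment from your shell (source .env/bin/activate on Linux and macOS, .env\Scripts\activate on Windows).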

Now, if you want to use 🤗 Transformers, you can install it with pip. If you’d like to play with the examples, you must install it from source.

Installation with pip

First you need to install TensorFlow 2.0, PyTorch, or both. Please refer to the TensorFlow installation page, the PyTorch installation page and/or the Flax installation page for the specific install command for your platform.

When TensorFlow 2.0 and/or PyTorch has been installed, 🤗 Transformers can be installed using pip as follows:

pip install transformers

Alternatively, for CPU-support only, you can install 🤗 Transformers and PyTorch in one line with:

pip install transformers[torch]

or 🤗 Transformers and TensorFlow 2.0 in one line with:

pip install transformers[tf-cpu]

or 🤗 Transformers and Flax in one line with:

pip install transformers[flax]

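To check 🤗 Transformers is properly installed, run the following command:

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('we love you'))"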

It should download a pretrained model, then print something like

[{'label': 'POSITIVE', 'score': 0.9998704791069031}]

(Note that TensorFlow will print additional stuff before that last statement.)

Installing from source

Here is how to quickly install transformers from source:

pip install git+https://github.com/huggingface/transformers

Note that this will install not the latest released version but the bleeding edge master version, which you may want to use in case a bug has been fixed since the last official release and a new release hasn’t yet been rolled out.

While we strive to keep master operational at all times, issues you notice usually get fixed within a few hours or a day. You’re more than welcome to help us detect problems by opening an Issue, so that things get fixed even sooner.

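Again, you can run the following command to check 🤗 Transformers is properly installed:

python -c "from transformers import pipeline; print(pipeline('sentiment-analysis')('we love you'))"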

Editable install

If you want to constantly use the bleeding edge master version of the source code, or if you want to contribute to the library and need to test the changes in the code you’re making, you will need an editable install. This is done by cloning the repository and installing with the following commands:

git clone https://github.com/huggingface/transformers.git

cd transformers

pip install -e .

This command links the folder you cloned the repository to with your python library paths, so python will look inside this folder in addition to the normal library-wide paths. So if your python packages normally get installed into your environment’s site-packages directory, this editable install will reside where you cloned the repository, e.g. ~/transformers/, and python will search it too.

Do note that you have to keep that transformers folder around and not delete it to continue using the transformers library.

Now, let’s get to the real benefit of this installation approach. Say you saw that some new feature has just been committed into master. If you have already performed all the steps above, all you need to do to update your transformers to include the latest commits is to cd into that cloned repository folder and update the clone to the latest version:

cd ~/transformers/

git pull

There is nothing else to do. Your python environment will find the bleeding edge version of transformers on the next run.

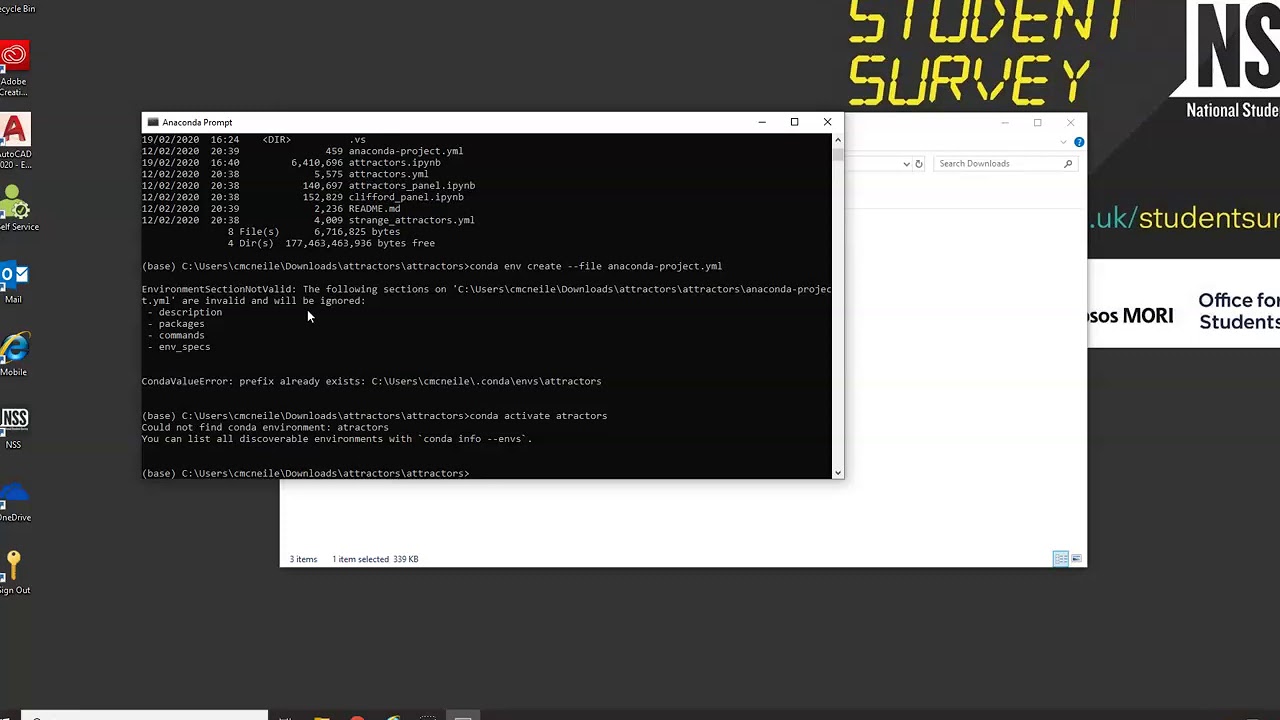

With conda

Since Transformers version v4.0.0, we now have a conda channel: huggingface.

🤗 Transformers can be installed using conda as follows:

conda install -c huggingface transformers

Follow the installation pages of TensorFlow, PyTorch or Flax to see how to install them with conda.

Caching models

This library provides pretrained models that will be downloaded and cached locally. Unless you specify a location with cache_dir=... when you use methods like from_pretrained, these models will automatically be downloaded to the folder given by the shell environment variable TRANSFORMERS_CACHE. Its default value is the Hugging Face cache home followed by /transformers/. The cache home is, in order of priority:

shell environment variable HF_HOME

shell environment variable XDG_CACHE_HOME + /huggingface/

default: ~/.cache/huggingface/

So if you don’t have any specific environment variable set, the cache directory will be at ~/.cache/huggingface/transformers/.

Note: If you have set a shell environment variable for one of the predecessors of this library (PYTORCH_TRANSFORMERS_CACHE or PYTORCH_PRETRAINED_BERT_CACHE), those will be used if there is no shell environment variable for TRANSFORMERS_CACHE.
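The priority rules above can be sketched as a small lookup function. This is an illustration of the described behavior, not the library's own code: the function name resolve_transformers_cache is hypothetical, and the order in which the two legacy variables are checked is an assumption, since the text does not specify it.

```python
import os
from pathlib import Path


def resolve_transformers_cache(env=None):
    """Return the cache directory implied by the priority rules above."""
    env = os.environ if env is None else env

    # An explicit TRANSFORMERS_CACHE wins, then the legacy variables.
    # Checking PYTORCH_TRANSFORMERS_CACHE before PYTORCH_PRETRAINED_BERT_CACHE
    # is an assumption of this sketch.
    for name in (
        "TRANSFORMERS_CACHE",
        "PYTORCH_TRANSFORMERS_CACHE",
        "PYTORCH_PRETRAINED_BERT_CACHE",
    ):
        if env.get(name):
            return Path(env[name])

    # Otherwise use the Hugging Face cache home followed by transformers/.
    if env.get("HF_HOME"):
        hf_home = Path(env["HF_HOME"])
    elif env.get("XDG_CACHE_HOME"):
        hf_home = Path(env["XDG_CACHE_HOME"]) / "huggingface"
    else:
        hf_home = Path.home() / ".cache" / "huggingface"
    return hf_home / "transformers"


# With no variables set, this prints the default location under your home.
print(resolve_transformers_cache({}))
```

Passing a plain dictionary instead of the real environment makes it easy to check what a given combination of variables would produce, for example resolve_transformers_cache({"HF_HOME": "/data/hf"}).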

Offline mode

It’s possible to run 🤗 Transformers in a firewalled or a no-network environment.

Setting the environment variable TRANSFORMERS_OFFLINE=1 tells 🤗 Transformers to use local files only and not to try to look things up.

You will most likely want to couple this with HF_DATASETS_OFFLINE=1, which does the same for 🤗 Datasets if you’re using the latter.

Here is an example of how this can be used on a filesystem that is shared between a normally networked instance and an instance that is firewalled from the external world.

On the instance with the normal network, run your program, which will download and cache models (and optionally datasets if you use 🤗 Datasets).

Then, with the same filesystem, run the same program on the firewalled instance with TRANSFORMERS_OFFLINE=1 set (and HF_DATASETS_OFFLINE=1 if you use 🤗 Datasets). It should succeed without hanging while waiting for a timeout.
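As a rough sketch of the firewalled run, the snippet below launches a child process with both offline variables set. The helper offline_env is a hypothetical name, and the child here is only a stand-in that prints what it sees; you would substitute the command for your own program.

```python
import os
import subprocess
import sys


def offline_env(base=None):
    """Return a copy of the environment with both offline switches set."""
    env = dict(os.environ if base is None else base)
    env["TRANSFORMERS_OFFLINE"] = "1"
    env["HF_DATASETS_OFFLINE"] = "1"
    return env


# Stand-in for your real program: it only reports the two variables.
child = (
    "import os; "
    "print(os.environ['TRANSFORMERS_OFFLINE'], os.environ['HF_DATASETS_OFFLINE'])"
)
result = subprocess.run(
    [sys.executable, "-c", child],
    env=offline_env(),
    capture_output=True,
    text=True,
    check=True,
)
print(result.stdout.strip())  # 1 1
```

Setting the variables only for the child process leaves the parent environment untouched, so the same launcher can still be used on the networked instance without the env argument.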

Fetching models and tokenizers to use offline

When running a script for the first time as mentioned above, the downloaded files will be cached for future reuse. However, it is also possible to download files and point to their local path instead.

Downloading files can be done through the Web Interface by clicking on the “Download” button, but it can also be handled programmatically using the huggingface_hub library, which is a dependency of transformers:

Using snapshot_download to download an entire repository

Using hf_hub_download to download a specific file

See the reference for these methods in the huggingface_hub documentation.
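A minimal sketch of the two calls named above, assuming huggingface_hub is installed and the network is reachable. The wrapper fetch_for_offline_use is a hypothetical helper, and bert-base-uncased and config.json are only example values.

```python
def fetch_for_offline_use(repo_id, filename="config.json"):
    """Download a whole repository and one named file; return their local paths."""
    # Imported lazily so this sketch loads even where huggingface_hub is absent.
    from huggingface_hub import hf_hub_download, snapshot_download

    # snapshot_download fetches every file in the repository.
    repo_dir = snapshot_download(repo_id=repo_id)
    # hf_hub_download fetches a single file from the repository.
    file_path = hf_hub_download(repo_id=repo_id, filename=filename)
    return repo_dir, file_path


# Example call (needs network access, so it is left commented out):
# repo_dir, file_path = fetch_for_offline_use("bert-base-uncased")
```

The returned directory can then be passed to from_pretrained in place of a model name, so that loading uses the local files.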

Do you want to run a Transformer model on a mobile device?

You should check out our swift-coreml-transformers repo.

It contains a set of tools to convert PyTorch or TensorFlow 2.0 trained Transformer models (currently contains GPT-2, DistilGPT-2, BERT, and DistilBERT) to CoreML models that run on iOS devices.

At some point in the future, you’ll be able to seamlessly move from pretraining or fine-tuning models in PyTorch or TensorFlow 2.0 to productizing them in CoreML, or prototype a model or an app in CoreML then research its hyperparameters or architecture from PyTorch or TensorFlow 2.0. Super exciting!

2. Python library installation : step by step guide

Step 1 : verify that you can access Python in command line

Open a terminal: use the Windows search function, type command, then click Command Prompt. A terminal window opens.

Then type python in lowercase.

If the Python prompt (>>>) appears, then you're good. If not, you need to review the installation of Python.
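If you already have some way of running a script, the same check can be sketched in Python itself. The helper find_interpreter and the list of names it tries are illustrative assumptions; on a given machine the interpreter may be exposed under only one of them.

```python
import shutil
import sys


def find_interpreter(names=("python", "python3", "py")):
    """Return the path of the first interpreter name found on PATH, or None."""
    for name in names:
        path = shutil.which(name)
        if path:
            return path
    return None


# Version of the interpreter running this script.
print("Running under Python", sys.version.split()[0])
# Path the command line would use, or None if Python is not on PATH.
print("Interpreter found on PATH:", find_interpreter())
```

If the second line prints None, the terminal cannot find Python, which usually means the installation did not add it to PATH.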

Step 2 : locate the package you need with PyPI

Python has a kind of app store for the available libraries. It is called PyPI. First go to PyPI to get the exact name of the package you wish to install.

For example, say you want to install numpy. Go to pypi.org and type numpy in the search box.

It is available, so you can download it with pip, the install tool that comes with Python.

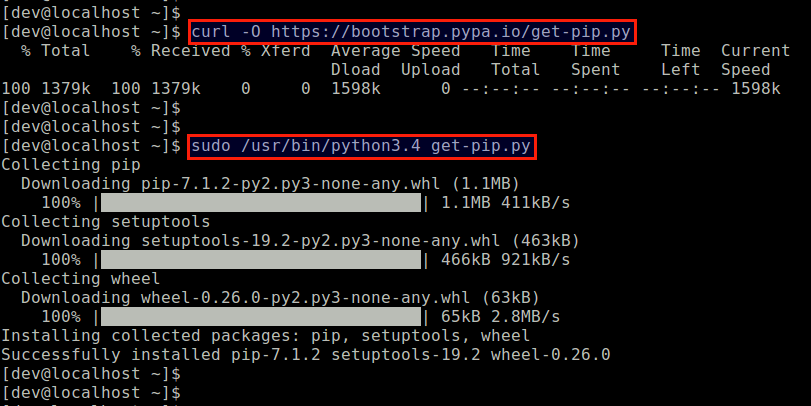


Step 3 : install the library with pip

Python has a tool to download and install the library from PyPi.

If you are still in the python prompt, exit by typing exit()

To install a new library, you just need to type the following command:

python -m pip install namelibrary

Replace namelibrary with the name of the package you want to install, in our case numpy:

python -m pip install numpy
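The same command can also be issued from a script. The sketch below builds the command with sys.executable so that pip runs under the same interpreter as the script. The helper names pip_install_command and install are hypothetical, and the demo only prints the command instead of running it.

```python
import subprocess
import sys


def pip_install_command(package):
    """Build the 'python -m pip install <package>' command as a list."""
    # sys.executable pins pip to the interpreter running this script.
    return [sys.executable, "-m", "pip", "install", package]


def install(package):
    """Run the install; raises CalledProcessError if pip fails."""
    subprocess.run(pip_install_command(package), check=True)


# Show the command that install("numpy") would run.
print(" ".join(pip_install_command("numpy")))
```

Calling install("numpy") performs the actual installation and needs network access.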

Step 4 : enjoy your new library

The new library is now available.
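One way to confirm this from Python, sketched with the standard-library importlib module. The helper is_installed is a hypothetical name, and it is meant for top-level module names such as numpy.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module with this name can be imported."""
    return importlib.util.find_spec(module_name) is not None


print(is_installed("json"))   # True: json ships with Python
print(is_installed("numpy"))  # True only if numpy has been installed
```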

Note that installation can sometimes be more complicated because of dependencies between libraries. pip resolves many of them and installs the other packages that a library needs, so for the most common libraries this procedure should be sufficient.